Preliminaries:

Given two modules $B,C$, we seek a module $A$ such that $B$ contains an isomorphic copy of $A$ and the resulting quotient module $B/A$ is isomorphic to $C$.

Saying that $B$ contains an isomorphic copy of $A$ is the same as saying that there is an injective homomorphism $\psi:A \rightarrow B$. This can be expressed as

\begin{equation}

A \equiv \psi(A) \subset B

\end{equation}

To say that $C$ is isomorphic to the quotient means that there is a surjective homomorphism $\phi: B\rightarrow C$ with $ker\;\phi=\psi(A)$.

This gives us a pair of homomorphisms

\begin{equation}

\label{eq:hom}

A \xrightarrow{\psi} B \xrightarrow{\phi} C

\end{equation}

such that $im\;\psi=ker\;\phi$.

A pair of homomorphisms satisfying this condition is called "exact" (at $B$).

Examples:

Using the direct sum of modules $A,C$, with $B=A \oplus C$, we can construct the following exact sequence.

\begin{equation}

0 \rightarrow A \xrightarrow{i} A \oplus C \xrightarrow{\pi} C \rightarrow 0

\end{equation}

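where $i(a)=(a,0)$ and $\pi(a,c) = c$. Notice that $(\pi \circ i)(a)=\pi(a,0)=0$, so the composition of consecutive maps is zero, the analogue of $\partial^2=0$. Exactness says more: $i$ is injective, $\pi$ is surjective, and $ker\;\pi=\{(a,0)\}=im\;i$.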

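When $A=Z$ (as a $Z$-module) and $C=Z/nZ$, the sequence above becomes the first sequence below. The second is a different exact sequence with the same end terms, in which the first map is multiplication by $n$ and $\pi$ is the quotient map.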

\begin{eqnarray}

0 \rightarrow Z \xrightarrow{i} Z \oplus Z/nZ \xrightarrow{\pi} Z/nZ \rightarrow 0 \\

0 \rightarrow Z \xrightarrow{n} Z \xrightarrow{\pi} Z/nZ \rightarrow 0

\end{eqnarray}

With $A=Z$ and $C=Z/nZ$, both sequences can be regarded as extensions of $C$ by $A$.
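The exactness claims for both sequences can be spot-checked numerically. The following is a minimal Python sketch. It assumes that $Z$ may be replaced by a finite window of integers, so it is only a sanity check and not a proof. The names `n`, `window`, `times_n` and `quotient` are illustrative choices.

```python
n = 4
window = range(-20, 21)  # finite stand-in for Z; the real statement is about all of Z

def times_n(a):
    """First map of the non-split sequence: multiplication by n."""
    return n * a

def quotient(b):
    """Quotient map Z -> Z/nZ, representing classes by 0..n-1."""
    return b % n

# Non-split sequence 0 -> Z -> Z -> Z/nZ -> 0: im(times_n) = ker(quotient), quotient onto
image = {times_n(a) for a in window if times_n(a) in window}
kernel = {b for b in window if quotient(b) == 0}
nonsplit_exact = image == kernel and {quotient(b) for b in window} == set(range(n))

# Split sequence 0 -> Z -> Z (+) Z/nZ -> Z/nZ -> 0 with i(a) = (a, 0), pi(a, c) = c
pairs = [(a, c) for a in window for c in range(n)]
image_i = {(a, 0) for a in window}
kernel_pi = {(a, c) for (a, c) in pairs if c == 0}
split_exact = image_i == kernel_pi

print(nonsplit_exact, split_exact)  # True True
```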

Every module is a quotient of a free module. For the homomorphism $\phi$ described below, we may form the following exact sequence.

\begin{equation}

0 \rightarrow K \xrightarrow{i} F(S) \xrightarrow{\phi} M \rightarrow 0

\end{equation}

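Here $S$ is a set of generators of the $R$-module $M$, $F(S)$ is the free $R$-module on $S$, and $\phi$ is the unique $R$-module homomorphism that is the identity on $S$. Since $S$ generates $M$, $\phi$ is onto. Finally, $K=ker\;\phi$ and $i$ is the inclusion.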

Saturday, August 8, 2020

Cohomology-Some Module theory

Friday, July 31, 2020

K-Algebras and Bimodules

Note that scalars commute with ring elements.

Examples:

- Field extensions such as $F/E$.

- Polynomial ring $k[X,Y,Z]$.

- The matrix ring $M_n(k)$ of $n \times n$ matrices (under addition and multiplication) is a $k$-algebra. Here elements of $k$ commute with all elements of $M_n(k)$, but the ring multiplication is non-commutative for $n \geq 2$.

- The set $Hom_k(V,V)$ of $k$-linear maps from a $k$-vector space $V$ to itself forms a $k$-algebra under addition and composition of linear maps.

A finite-dimensional $k$-algebra is one that is finite-dimensional as a vector space over $k$.

For example, ${\cal C}$ is a 2-dimensional algebra over ${\cal R}$.

Bimodules

- $M$ is a left $R$ module, and a right $S$ module.

- for all $r \in R$, $s \in S$ and $m \in M$ \begin{equation}

\label{eq:rs}

(rm)s=r(ms)

\end{equation}

For positive integers $m,n$, the set $M_{nm}(\mathcal{R})$ of $n \times m$ matrices is an example. Here the ring acting on the left is $R=M_{nn}(\mathcal{R})$, the $n \times n$ matrices, and the ring acting on the right is $S=M_{mm}(\mathcal{R})$, the $m \times m$ matrices.

Addition and multiplication are carried out using the usual rules of matrix addition and matrix multiplication; the heights and widths of the matrices have been chosen so that multiplication is defined.

The crucial bimodule property, that $(rx)s = r(xs)$, is the statement that multiplication of matrices is associative.
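Here is a quick numerical check of the bimodule identity for matrices. It is a minimal sketch that assumes NumPy is available. The sizes `n`, `m` and the random entries are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 2, 3
r = rng.standard_normal((n, n))   # element of R = M_nn
x = rng.standard_normal((n, m))   # element of the bimodule M_nm
s = rng.standard_normal((m, m))   # element of S = M_mm

lhs = (r @ x) @ s   # (r x) s
rhs = r @ (x @ s)   # r (x s)
print(np.allclose(lhs, rhs))  # True
```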

A ring $R$ is an $R$-$R$ bimodule, with both actions given by the ring multiplication.

If $M$ is an $S$-$R$ bimodule and $N$ is an $R$-$T$ bimodule, then $M \otimes_R N$ is an $S$-$T$ bimodule.

Bimodule homomorphism:

For $R$-$S$ bimodules $M,N$, a bimodule homomorphism $f:M \rightarrow N$ is a map that is both a left $R$-module homomorphism and a right $S$-module homomorphism.

An $R$-$S$ bimodule is the same as a left module over the ring $R \otimes_Z S^{op}$, where $S^{op}$ is the opposite ring of $S$. In the opposite ring, multiplication is performed in the opposite order to the original ring.

This caused me some confusion initially. $S^{op}$ is the ring $S$ with multiplication reversed. Denote multiplication in the ring $S$ by "." and the opposite multiplication by "*", so that $s*s'=s'.s$. How does all this define a bimodule?

Let's look at how an element $r \otimes s$ of $R \otimes_Z S^{op}$ acts on $m \in M$.

\begin{equation}

(r \otimes s)\,m = r\,m.s = (rm)s = r(ms)

\end{equation}

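Thus the definition is satisfied, and condition \eqref{eq:rs} is exactly what makes $r\,m.s$ unambiguous. The reversed multiplication is what makes this a left action. Acting first by $r'\otimes s'$ and then by $r\otimes s$ gives $rr'\,m\,s'.s = (rr' \otimes s*s')\,m$, which is the action of the product $(r\otimes s)(r'\otimes s')$ in $R \otimes_Z S^{op}$. Using $R \otimes_Z S^{op}$ is nicer because a bimodule then becomes an ordinary left module.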
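The role of the reversed multiplication can also be checked with matrices. This is a minimal NumPy sketch that uses the matrix bimodule $M_{nm}(\mathcal{R})$ from the example above. The helper names `act` and `op_mul` are hypothetical, and only pure tensors $r\otimes s$ are used.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 2, 3

def act(r, s, x):
    """Action of the pure tensor r (x) s in R (x) S^op on x: r x s."""
    return r @ x @ s

def op_mul(s1, s2):
    """Multiplication s1 * s2 in S^op, i.e. the product s2 . s1 in S."""
    return s2 @ s1

r1, r2 = rng.standard_normal((2, n, n))
s1, s2 = rng.standard_normal((2, m, m))
x = rng.standard_normal((n, m))

# Acting by r2 (x) s2 first and then by r1 (x) s1 ...
step_by_step = act(r1, s1, act(r2, s2, x))
# ... equals acting once by the product (r1 r2) (x) (s1 * s2) computed in R (x) S^op.
at_once = act(r1 @ r2, op_mul(s1, s2), x)
print(np.allclose(step_by_step, at_once))  # True
```

With the ordinary product `s1 @ s2` in place of `op_mul(s1, s2)`, the two sides generally differ. That is why $S^{op}$ rather than $S$ appears in the tensor product.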

Tuesday, July 28, 2020

Associative algebras-some preliminary notes

Associative algebras generalize field extensions and matrix algebras. For example, in a field extension $E/F$ of degree $n$, $E$ can be considered an $F$-algebra of dimension $n$. In particular, $E$ is an $F$-vector space.

An associative algebra $\mathcal{A}$ over a field $F$ is a ring (with associative multiplication) that is also an $F$-vector space, the vector space addition being the ring addition.

$K$-algebra means an associative algebra over field $K$.

In short, we want the $F$ action to be compatible with multiplication in $\mathcal{A}$. Say $f \in F$ and $a,b \in \mathcal{A}$ then

\begin{equation}

(f.a)b=f.(ab)=a(f.b)

\end{equation}

We may consider $F$ as a subring of $\mathcal{A}$ under the identification $f \rightarrow f.1_A$, where $1_A$ is the multiplicative identity. Then, in the compatibility condition above, we can drop the dot between $F$ elements and $\mathcal{A}$ elements.

\begin{equation}

fab = afb

\end{equation}

Taking $b=1_A$ gives $fa=af$ for every $a \in \mathcal{A}$. Hence $F \subset Z(\mathcal{A})$, that is, $F$ lies in the center of $\mathcal{A}$.

Examples:

A standard first example of a $K$-algebra is a ring of square matrices over a field $K$, with the usual matrix multiplication.

Let $F=Q$, the field of rationals, and consider the polynomial $X^2-2 \in Q[X]$. Its splitting field is $E=Q(\sqrt{2})=\{a+b\sqrt{2} \mid a,b \in Q\}$. This is a vector space over $F$ with basis $\{1,\sqrt{2}\}$, so $dim_F(E)=2$.

Note that an $n$-dimensional $F$-algebra $A$ (with identity) can be realized as a subalgebra of $M_n(F)$, the $n\times n$ matrices over $F$, by sending $a$ to the matrix of the linear map $x \mapsto ax$.
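For $E=Q(\sqrt{2})$ this realization can be written down explicitly. The element $a+b\sqrt{2}$ acts on the basis $\{1,\sqrt{2}\}$ by the matrix with columns $(a,b)$ and $(2b,a)$. Below is a minimal Python sketch using exact rational arithmetic from the standard library. The helper names and the sample elements are illustrative.

```python
from fractions import Fraction as Q

def mul(u, v):
    """Multiply (a + b*sqrt2)(c + d*sqrt2), stored as pairs (a, b) of rationals."""
    a, b = u
    c, d = v
    return (a * c + 2 * b * d, a * d + b * c)

def left_matrix(u):
    """Matrix of x -> u*x in the basis {1, sqrt2}; columns are the images of 1 and sqrt2."""
    a, b = u
    return [[a, 2 * b],
            [b, a]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

u = (Q(1), Q(3))      # 1 + 3 sqrt2
v = (Q(1, 2), Q(-1))  # 1/2 - sqrt2
# u -> left_matrix(u) is multiplicative, so Q(sqrt2) sits inside M_2(Q) as a subalgebra.
print(mul(u, v))                                                   # (Fraction(-11, 2), Fraction(1, 2))
print(left_matrix(mul(u, v)) == matmul(left_matrix(u), left_matrix(v)))  # True
```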

If $A,B$ are $F$-algebras, they can be "added" and "multiplied": the direct sum $A \oplus B$ and the tensor product $A \otimes_F B$ are again associative $F$-algebras.

If $A$ is an $F$-algebra of dimension $2$, then one of three things happens: $A$ is a quadratic field extension of $F$, or $A \equiv F \oplus F$, or $A$ contains a non-zero nilpotent element.

To prove this, first we establish commutativity of $A$ using basis $\{1,\alpha\}$ over $F$.

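To see this, expand $(x+y\alpha)(x'+y'\alpha) = xx' + (xy'+x'y)\alpha + yy'\alpha^2$. This expression is symmetric in the primed and unprimed coefficients.

For $A$ to be a quadratic field extension, every non-zero element of $A$ must be invertible.

So suppose $x+y\alpha$ is a non-zero element that is not invertible. Then $y \neq 0$, since non-zero scalars are invertible. After replacing $\alpha$ by $\alpha + x/y$ (which still gives a basis $\{1,\alpha\}$), we may assume that $\alpha$ itself is not invertible.

Since $A$ can be represented as a subalgebra of $M_2(F)$, we write $\alpha$ as a $2 \times 2$ matrix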

\begin{align*}

\alpha = \begin{pmatrix} a & b \\ c & d \end{pmatrix}

\end{align*}

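Since $\alpha$ is not invertible, $ad-bc=0$, and the Cayley-Hamilton theorem gives $\alpha^2=(a+d)\alpha$. If $a+d \neq 0$, then $e=\alpha/(a+d)$ satisfies $e^2=e$, and $A = Fe \oplus F(1-e) \equiv F \oplus F$. If $a+d=0$, then $\alpha^2=0$, so $\alpha$ is a non-zero nilpotent element.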
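The case split in this last step can be checked on concrete matrices. Below is a minimal Python sketch with exact fractions. It assumes $\alpha$ is already given as a singular $2\times 2$ matrix over $Q$. The function `classify` and the sample matrices are hypothetical.

```python
from fractions import Fraction as Q

def matmul(A, B):
    """Product of 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def scale(c, A):
    return [[c * entry for entry in row] for row in A]

def classify(alpha):
    """Sort a non-invertible 2x2 matrix alpha into the idempotent or nilpotent case."""
    (a, b), (c, d) = alpha
    assert a * d - b * c == 0, "alpha must be non-invertible"
    tr = a + d
    # Cayley-Hamilton with det(alpha) = 0 reduces to alpha^2 = tr(alpha) * alpha.
    assert matmul(alpha, alpha) == scale(tr, alpha)
    if tr != 0:
        return "idempotent", scale(1 / tr, alpha)   # e = alpha / tr satisfies e^2 = e
    return "nilpotent", alpha                        # tr = 0 forces alpha^2 = 0

alpha = [[Q(1), Q(2)], [Q(2), Q(4)]]   # det 0, trace 5
nil = [[Q(0), Q(1)], [Q(0), Q(0)]]     # det 0, trace 0

kind, e = classify(alpha)
print(kind, matmul(e, e) == e)         # idempotent True
print(classify(nil)[0])                # nilpotent
```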

The opposite algebra $A^{opp}$ has the same underlying vector space as $A$, with multiplication in the reverse order. That is, for $a,b \in A$, writing $a.b$ for multiplication in $A$ and $\times$ for multiplication in $A^{opp}$, we have $b \times a = a.b$.

Sunday, July 26, 2020

Cohomology-Homotopy operator etc.,

Homotopy equivalent manifolds have isomorphic de Rham cohomology groups.

Suppose $F,G:M\rightarrow N$ are smoothly homotopic maps. Let $\omega$ be a $k$-form on $N$, and let $h$ be a homotopy operator: a linear map from $k$-forms on $N$ to $(k-1)$-forms on $M$ satisfying

\begin{equation}

d(h\omega)+h(d\omega)=G^{*}(\omega)-F^{*}(\omega)

\end{equation}

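This means $h:\mathcal{A}^k(N) \rightarrow \mathcal{A}^{k-1}(M)$. In particular, if $\omega$ is closed, then $G^{*}\omega-F^{*}\omega=d(h\omega)$ is exact. So $F$ and $G$ induce the same map $H^k(N)\rightarrow H^k(M)$ in cohomology, which is the key step in showing that homotopy equivalent manifolds have isomorphic cohomology.

There is also de Rham's theorem, whose proof I shall blog about later.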
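Here is a minimal SymPy sketch of the homotopy formula in the simplest case. It assumes $M=N=\mathcal{R}$, $F$ the constant map to $0$, $G$ the identity, and the homotopy $H(x,t)=tx$. For this particular homotopy, the operator on $1$-forms is taken to be $h(f\,dx)=\int_0^1 x f(tx)\,dt$, which is what contracting $H^*(f\,dx)=f(tx)(t\,dx+x\,dt)$ with $\partial/\partial t$ and integrating over $t$ gives. Since $d\omega=0$ on $\mathcal{R}$ and $F^*\omega=0$, the formula reduces to $d(h\omega)=f\,dx$. The polynomial `f` is an arbitrary choice.

```python
import sympy as sp

x, t = sp.symbols("x t", real=True)

# Hypothetical example: F(x) = 0 (constant map), G(x) = x (identity), H(x, t) = t*x.
f = 3 * x**2 + 1          # omega = f(x) dx; every 1-form on R is closed

# Homotopy operator for this H: (h omega)(x) = integral_0^1 x * f(t x) dt, a 0-form.
h_omega = sp.integrate(x * f.subs(x, t * x), (t, 0, 1))

# Homotopy formula with d(omega) = 0:  d(h omega) = G^* omega - F^* omega = f(x) dx - 0.
print(sp.expand(h_omega))                          # x**3 + x
print(sp.simplify(sp.diff(h_omega, x) - f) == 0)   # True
```

In this case the homotopy operator is exactly the antiderivative used later for the real line, which is why every closed form on $\mathcal{R}$ turns out to be exact.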

Saturday, July 25, 2020

Cohomology- Homotopy

Suppose you want to detect a rock (an obstruction) on a surface. Your plan has the following steps.

1. Put a peg on the ground at a random place. Tie a rope.

2. Walk in some direction for some time, unrolling your rope. Assuming that you haven't hit a rock, plant another peg and tie the other end of the rope to it.

3. Pause and recognize this rope as a curve on the surface and name it $f$.

4. Walk a few feet and repeat the same steps. If you succeed in unrolling your rope, you have another curve; call it $g$.

Now, if you can drag rope $f$ to rope $g$, there is no rock in between. Otherwise you have an obstruction: a rock!

If you can drag $f$ to $g$ (or vice versa), you write $f \sim g$. This is the basic idea of homotopy.

While dragging the rope from $f$ to $g$, you are following a map $F$ with a parameter $t$ for the drag. At the start ($t=0$) $F$ should be $f$, and at the end ($t=1$) $F$ should be $g$.

This notion is formalized as follows. Let $M,N$ be two manifolds and let $f,g:M\rightarrow N$ be two smooth functions. If there is a $C^\infty $ map

\begin{equation}

F: M \times R \rightarrow N

\end{equation}

such that $F(x,0) = f(x)$ and $F(x,1)=g(x)$ for all $x \in M$, then we say $f$ is homotopic to $g$ and write $f \sim g$.

Now that we have such a map, we can add extra nomenclature when certain conditions occur. Let $N=\mathcal{R}^n$. A homotopy $F:M\times \mathcal{R} \rightarrow \mathcal{R}^n$ that is linear in $t$ can be expressed as

\begin{equation}

F(x,t) = (1-t)f(x)+t\,g(x)

\end{equation}

When $t=0$, $F(x,0)=f(x)$, and when $t=1$, $F(x,1)=g(x)$.

Notice $F(x,t)=f(x)+(g(x)-f(x))t$, which is like $y=mx+c$, a straight line equation in $t$. Such a homotopy is called a "straight line homotopy".

A subset of $\mathcal{R}^n$ is convex if the straight line segment joining any two of its points lies in the subset. For maps into a convex subset, the straight line homotopy never leaves the subset, so it is always applicable there.
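Here is a small NumPy sketch of a straight line homotopy between two sampled closed curves in the unit disk, which is a convex subset of $\mathcal{R}^2$. The curves and the sampling are hypothetical choices. The sketch checks the endpoints $t=0,1$ and that every intermediate curve stays in the disk, as convexity guarantees.

```python
import numpy as np

theta = np.linspace(0.0, 2.0 * np.pi, 200)

# Two hypothetical closed curves in the closed unit disk.
f = np.stack([0.5 * np.cos(theta), 0.5 * np.sin(theta)], axis=1)       # circle of radius 1/2
g = np.stack([0.9 * np.cos(2 * theta), 0.3 * np.sin(theta)], axis=1)   # another closed curve

def straight_line_homotopy(f, g, t):
    """F(x, t) = (1 - t) f(x) + t g(x), evaluated pointwise on the sampled curves."""
    return (1.0 - t) * f + t * g

ts = np.linspace(0.0, 1.0, 11)
frames = [straight_line_homotopy(f, g, t) for t in ts]

print(np.allclose(frames[0], f), np.allclose(frames[-1], g))        # True True
# Convexity: every intermediate curve stays inside the unit disk.
print(max(np.linalg.norm(F, axis=1).max() for F in frames) <= 1.0)  # True
```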

Sometimes we are fortunate enough to find smooth maps $f:M \rightarrow N$ and $g:N \rightarrow M$ such that $f\circ g$ is homotopic to the identity on $N$ and $g \circ f$ is homotopic to the identity on $M$. In such situations, $M$ is said to be "homotopy equivalent" to $N$, or $M,N$ are said to have the same homotopy type.

Notice that nothing prevents $N$ from being a single point. For example, if $M=\mathcal{R}^n$ and $N$ is a single point, we can smoothly shrink $\mathcal{R}^n$ to that point. Manifolds that can be shrunk to a point in this way (that is, manifolds homotopy equivalent to a point) are called "contractible".

Friday, July 24, 2020

Cohomology-Computations 1

Cover $S^1$ by two open arcs $U$ and $V$ whose intersection consists of two disjoint arcs. Each arc is diffeomorphic to an open interval and thus to $\mathcal{R}$.

Instead of writing down one-forms and checking whether they can be integrated, we can use the Mayer-Vietoris sequence to make computations. Since $H^1(U)=H^1(V)=0$, the Mayer-Vietoris sequence for $S^1$ is as follows:

\begin{equation}

\label{eq:MV1}

0 \rightarrow H^{0}(M) \xrightarrow{i^*} H^{0}(U) \oplus H^{0}(V) \xrightarrow{j^*} H^{0}(U \cap V) \xrightarrow{d^*} H^1(M) \rightarrow 0.

\end{equation}

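For an exact sequence of finite-dimensional vector spaces $0\rightarrow V^0\rightarrow V^1\rightarrow\cdots\rightarrow V^n\rightarrow 0$, the alternating sum of dimensions $\sum_{k=0}^n(-1)^k \dim V^k$ is zero. With $d^1=\dim H^1(M)$, this gives $d^1=1$: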

\begin{equation}

1 - 2 + 2 - d^1 = 0

\end{equation}

Since $S^1$ is connected, $H^0(S^1)=\mathcal{R}$. As shown before, $H^0(U)=H^0(V)=\mathcal{R}$. Since $U \cap V$ is the disjoint union of two arcs, $H^0(U \cap V)=\mathcal{R} \oplus \mathcal{R}$. All this results in the following sequence.

\begin{equation}

\label{eq:MV2}

0 \rightarrow \mathcal{R} \xrightarrow{i^*} \mathcal{R} \oplus \mathcal{R} \xrightarrow{j^*} \mathcal{R} \oplus \mathcal{R} \xrightarrow{d^*} H^1(S^1) \rightarrow 0

\end{equation}

The map $j^*:H^{0}(U) \oplus H^{0}(V) \rightarrow H^{0}(U \cap V)$ is given as follows. Elements of $H^0$ of a connected set are constant functions, so they are just real numbers. An element of $H^0(U \cap V)$ is a pair of numbers, one for each arc of the intersection.

\begin{equation}

j^*(m,n) = (n-m,n-m)

\end{equation}

So the image of $j^*$ is the diagonal $\{(a,a)\}$ of $\mathcal{R}\times\mathcal{R}$, which is one-dimensional. By exactness, $d^*: H^{0}(U \cap V) \rightarrow H^{1}(S^1)$ is onto, with kernel equal to this diagonal. Hence $H^1(S^1) \cong (\mathcal{R}\oplus\mathcal{R})/\{(a,a)\} \cong \mathcal{R}$. Any pair $(m,n)$ with $m \neq n$ maps to a non-zero class, and we can choose any one of them as a generator.
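The same computation can be phrased as linear algebra: $H^0(S^1)\cong ker\;j^*$ and $H^1(S^1)\cong coker\;j^*$. Below is a minimal NumPy sketch. It reads the matrix of $j^*$ in the standard bases off the formula $j^*(m,n)=(n-m,n-m)$ above.

```python
import numpy as np

# j^*: H^0(U) (+) H^0(V) = R^2 -> H^0(U cap V) = R^2,  j^*(m, n) = (n - m, n - m)
j_star = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])

rank_j = np.linalg.matrix_rank(j_star)
dim_H0_M = 2 - rank_j        # H^0(S^1) = im i^* = ker j^*  (i^* is injective)
dim_H1_M = 2 - rank_j        # H^1(S^1) = coker j^*, since d^* is onto with kernel im j^*
print(rank_j, dim_H0_M, dim_H1_M)  # 1 1 1

# Cross-check with the alternating-sum formula 1 - 2 + 2 - d^1 = 0.
print(dim_H0_M - 2 + 2 - dim_H1_M == 0)  # True
```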

Thursday, July 23, 2020

Cohomology-Mayer-Vietoris Long exact sequence.

\begin{equation}

0 \rightarrow \Omega^{*}(M) \xrightarrow{i} \Omega^{*}(U) \oplus \Omega^{*}(V) \xrightarrow{j} \Omega^{*}(U \cap V)\rightarrow 0

\end{equation}

where $\{U,V\}$ is an open cover of a manifold $M$, is a short exact sequence of cochain complexes. It yields a long exact sequence in cohomology called the Mayer-Vietoris sequence.

\begin{equation}

\cdots H^{k-1}(U \cap V) \xrightarrow{d^{*}} H^{k}(M) \xrightarrow{i^{*}} H^k(U) \oplus H^k(V) \xrightarrow{j^{*}} H^{k}(U \cap V) \xrightarrow{d^{*}} H^{k+1}(M)\cdots

\end{equation}

In this sequence, $i^{*},j^{*}$ are induced by $i,j$. Since $H^k$ is a quotient, its elements are cohomology classes. If we take a closed form $\sigma \in \Omega^{k}(M)$ representing a class, the map $i$ sends it to $i\sigma$. Based on this, one can define $i^{*}$ as follows:

\begin{equation}

i^{*}([\sigma]) = ([i\sigma]) = ([i^{*}_U\sigma],[i^{*}_V\sigma])\in H^k(U)\oplus H^k(V)

\end{equation}

Define $j^{*}$ similarly, by applying $j$ to representatives and taking classes, instead of working with differential forms directly:

\begin{equation}

j^{*}([\omega],[\tau]) = ([j^*_V\tau-j^*_U\omega]) \in H^k(U \cap V)

\end{equation}

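To make all this work, we need a connecting homomorphism $d^*:H^k(U\cap V)\rightarrow H^{k+1}(M)$. Given a closed form $\eta\in\Omega^k(U\cap V)$, use the surjectivity of $j$ to choose $(\omega,\tau)$ with $j(\omega,\tau)=\tau-\omega=\eta$. Then $d\tau-d\omega=d\eta=0$ on $U\cap V$. So $d\omega$ and $d\tau$ agree on the overlap and glue to a closed form $\alpha\in\Omega^{k+1}(M)$ with $i(\alpha)=(d\omega,d\tau)$. We define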

\begin{equation}

d^*[\eta]=[\alpha] \in H^{k+1}(M)

\end{equation}

Since $\Omega^{k}(M)=0$ for $k \leq -1$, the sequence starts as

\begin{equation}

0 \rightarrow H^{0}(M) \rightarrow H^0(U) \oplus H^0(V) \rightarrow H^{0}(U \cap V) \rightarrow H^{1}(M) \rightarrow \cdots

\end{equation}

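If moreover $H^1(U)=H^1(V)=0$ (for instance, when $U$ and $V$ are contractible, like the two arcs covering $S^1$), the map $H^1(M) \rightarrow H^1(U) \oplus H^1(V)$ is zero. The sequence then truncates to the exact sequence

\begin{equation}

0 \rightarrow H^{0}(M) \rightarrow H^0(U) \oplus H^0(V) \rightarrow H^{0}(U \cap V) \rightarrow H^{1}(M) \rightarrow 0

\end{equation}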

Tuesday, July 21, 2020

Cohomology-Partition of Unity

The idea behind a partition of unity (POU) is as follows. Imagine a family of continuous functions on a topological space, taking values in the closed interval $[0,1]$. Around each point only finitely many of them are non-zero, and at every point their values add up to $1$. Each function is non-zero on a small region and vanishes (becomes $0$) elsewhere.

How is all this formalized? Given a sufficiently nice topological space, such as a manifold, we can define these functions in the following manner.

1. Take an open cover $\{U_i\}$ of the manifold $M$.

2. Take a family of smooth functions $0 \leq \beta_i(x) \leq 1$ such that every point has a neighborhood on which only finitely many $\beta_i$ are non-zero, and $\sum_i \beta_i(x)=1$ at every point $x$.

3. Each $\beta_i$ vanishes outside $U_i$.

Such a family $\{\beta_i\}$ is called a POU subordinate to $\{U_i\}$.

How's this useful?

On manifolds, integration of the forms can be done on a coordinate patch. POU helps extend this coordinate patch integration to whole manifold.

For an orientable manifold $M$, take a volume form $\omega$. At a point $p$ it can be written as

\begin{equation}

\omega = h(p)\, dx^1 \wedge dx^2 \wedge \cdots \wedge dx^n

\end{equation}

with $h(p)>0$, on a chart $U_i$ with coordinates $x=\phi_i(p)$.

Let $f:M \rightarrow R$ be a function on $M$. In the coordinate neighborhood $U_i$, one can define the integral of the $n$-form $f\omega$ as

\begin{equation}

\int_{U_i}f = \int_{\phi_i(U_{i})} f(\phi^{-1}_i(x))h(\phi^{-1}_i(x))dx^1 \cdots dx^n

\end{equation}

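A POU $\{\beta_i\}$ subordinate to $\{U_i\}$ enables us to extend this integration to the entire manifold by defining $\int_M f = \sum_i \int_{U_i} \beta_i f$. Each term on the right is a single-chart integral of the form above.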

Example: On $S^1$, use the open sets $U_1=S^1 - \{(1,0)\}$ and $U_2=S^1-\{(-1,0)\}$ and the "bump" functions

$\beta_1(\theta)=\sin^2(\frac{\theta}{2})$ and $\beta_2(\theta)=\cos^2(\frac{\theta}{2})$.

Here $\theta$ is the angle measured from $(1,0)$, and $\beta_1+\beta_2=\sin^2+\cos^2=1$ everywhere.

This is a partition of unity because:

1. $\beta_i(\theta) \in [0,1]$ for $i=1,2$.

2. At $p=(1,0)$, which is not in $U_1$, $\beta_1(0)=\sin^2 0=0$. Similarly, at $p=(-1,0)$, which is not in $U_2$, $\beta_2(\pi)=\cos^2(\pi/2)=0$.

3. At any angle where both functions are non-zero, the POU acts as a weight that allocates a fraction to one function and the rest to the other. For example, at $\theta = \pi/2$, $\beta_1(\pi/2)=\beta_2(\pi/2)=1/2$, and $1/2+1/2=1$.

We can use this to integrate, say, $\int_{S^1} \cos^2\theta\, d\theta$. Since the POU acts as a weighting function that sums to $1$, we can let the charts overlap in the integration; the POU assigns the appropriate weights so that the overlap is not counted twice. Parametrize $U_1$ by $\theta \in (0,2\pi)$ and $U_2$ by $\theta \in (-\pi,\pi)$:

\begin{equation}

\int_{S^1}\cos^2(\theta)d\theta = \int_0^{2\pi}\sin^2(\theta/2)\cos^2(\theta)d\theta + \int_{-\pi}^{\pi} \cos^2(\theta/2)\cos^2(\theta)d\theta = \frac{\pi}{2}+\frac{\pi}{2} = \pi

\end{equation}
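Here is a quick numerical check of this integral. It is a plain Python sketch using the midpoint rule, and the step count is an arbitrary choice. Each chart integral is computed in its own parametrization, weighted by its bump function.

```python
import math

def midpoint(fun, a, b, steps=2000):
    """Midpoint-rule approximation of the integral of fun over [a, b]."""
    h = (b - a) / steps
    return h * sum(fun(a + (k + 0.5) * h) for k in range(steps))

def beta1(th):
    return math.sin(th / 2) ** 2   # vanishes at theta = 0, i.e. at (1, 0)

def beta2(th):
    return math.cos(th / 2) ** 2   # vanishes at theta = pi, i.e. at (-1, 0)

def f(th):
    return math.cos(th) ** 2

# Chart U_1: theta in (0, 2pi); chart U_2: theta in (-pi, pi).
part1 = midpoint(lambda th: beta1(th) * f(th), 0.0, 2 * math.pi)
part2 = midpoint(lambda th: beta2(th) * f(th), -math.pi, math.pi)
print(round(part1, 6), round(part2, 6), round(part1 + part2, 6))  # 1.570796 1.570796 3.141593
```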

Monday, July 20, 2020

Cohomology: Mayer-Vietoris sequence.

Define the following inclusion maps

\begin{eqnarray}

i_U:U \rightarrow M, i_U(p) = p \\

i_V:V \rightarrow M, i_V(p) = p \\

j_U:U \cap V \rightarrow U, j_U(p) = p \\

j_V:U \cap V \rightarrow V, j_V(p) = p

\end{eqnarray}

These are inclusion maps: $i_U(p)=p$ includes $U$ into $M$, $i_V(q)=q$ includes $V$ into $M$, and $j_U,j_V$ include $U \cap V$ into $U$ and $V$.

Based on these inclusion maps, one can define pullback maps of differential forms

\begin{equation}

i^{*}_U:\Omega^{k}(M) \rightarrow \Omega^{k}(U)

\end{equation}

Similarly one can define a pull back for $i_V$

\begin{equation}

i^{*}_V:\Omega^{k}(M) \rightarrow \Omega^{k}(V)

\end{equation}

Similar pullback maps are defined for $j_U,j_V$.

\begin{eqnarray}

j^*_U:\Omega^k(U) \rightarrow \Omega^k(U \cap V) \\

j^*_V:\Omega^k(V) \rightarrow \Omega^k(U \cap V)

\end{eqnarray}

By restricting to $U$ and to $V$, we get a homomorphism of vector spaces

\begin{equation}

i:\Omega^{k}(M) \rightarrow \Omega^k(U) \oplus \Omega^k(V)

\end{equation}

defined via

\begin{equation}

\sigma \rightarrow (i^{*}_U\sigma,i^{*}_V\sigma)

\end{equation}

Using this, define the difference map,

\begin{equation}

j:\Omega^k(U) \oplus \Omega^k(V) \rightarrow \Omega^k(U \cap V)

\end{equation}

by $j(\omega,\tau)=\tau-\omega$.

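This map compares the two forms on the overlap $U \cap V$, which belongs to both $U$ and $V$. A similar construction is used for subspaces $U,V$ of a finite-dimensional vector space. There the exact sequence $0\rightarrow U\cap V\rightarrow U\oplus V\rightarrow U+V\rightarrow 0$ proves the dimension formula $\dim(U+V)=\dim U+\dim V-\dim(U\cap V)$.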

Here $\tau-\omega$ is shorthand: $\omega$ and $\tau$ stand for their restrictions (pullbacks) to $U \cap V$,

\begin{eqnarray}

\omega|_{U \cap V} = j^*_U\omega \\

\tau|_{U \cap V} = j^*_V \tau

\end{eqnarray}

$j$ is the zero map when $U \cap V = \emptyset$, since then $\Omega^k(U\cap V)=0$.
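The vector space analogy can be checked numerically. Below is a minimal NumPy sketch using two hypothetical subspaces of $\mathcal{R}^3$, each given by independent spanning columns. The map $(u,v)\mapsto v-u$, built in the same way as $j$, has image $U+V$. Its kernel consists of coefficient pairs that describe the same vector $w\in U\cap V$ from both sides.

```python
import numpy as np

# Hypothetical subspaces of R^3 given by independent spanning columns.
U = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])   # span(e1, e2)
V = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])   # span(e2, e3)

# Difference map on coefficients: (a, b) -> V b - U a, i.e. (u, v) -> v - u.
diff_map = np.hstack([-U, V])

dim_U, dim_V = U.shape[1], V.shape[1]
dim_sum = np.linalg.matrix_rank(diff_map)      # the image is U + V
dim_kernel = dim_U + dim_V - dim_sum           # rank-nullity on U (+) V

# With rank 3 and 4 columns, the kernel is spanned by the last right-singular vector.
_, _, vt = np.linalg.svd(diff_map)
a, b = vt[-1][:dim_U], vt[-1][dim_U:]
w = U @ a                                      # the common vector seen from U ...
print(np.allclose(w, V @ b))                   # ... equals the one seen from V: True
print(dim_sum, dim_kernel)                     # 3 1
```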

Proposition

For each integer $k \geq 0$, the sequence

\begin{equation}

0 \rightarrow \Omega^k(M) \xrightarrow{i} \Omega^k(U) \oplus \Omega^k(V) \xrightarrow{j} \Omega^k(U \cap V) \rightarrow 0

\end{equation}

is exact.

Proof:

To show that this sequence is exact, we need to show that at each node the image of the incoming map equals the kernel of the outgoing map.

We will start with first node - $\Omega^k(M)$.

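The image of $0\rightarrow \Omega^k(M)$ is $\{0\}$, so exactness at $\Omega^k(M)$ means $ker\;i=\{0\}$, i.e. $i$ is injective. Indeed, if $i\sigma=(i^*_U\sigma,i^*_V\sigma)=(0,0)$, then $\sigma$ vanishes on $U$ and on $V$, hence on $U \cup V = M$.

Exactness at $\Omega^k(U) \oplus \Omega^k(V)$ means $im\;i=ker\;j$. For $\sigma \in \Omega^k(M)$, both restrictions agree on $U \cap V$, so $j(i\sigma)=0$. Conversely, if $j(\omega,\tau)=\tau-\omega=0$, then $\omega$ and $\tau$ agree on $U \cap V$ and glue to a form $\sigma$ on $M$ with $i\sigma=(\omega,\tau)$.

To prove exactness at $\Omega^k(U \cap V)$, we need to show that $j$ is surjective (onto). The next map sends everything to zero, so its kernel is all of $\Omega^k(U \cap V)$, and this must equal the range of $j$.

We defined the map $j$ above. This map, together with a partition of unity, lets us establish that $j$ is onto.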

Let $\omega \in \Omega^k(U \cap V)$, and let $\{p_U,p_V\}$ be a partition of unity subordinate to the open cover $\{U,V\}$. Define forms $\xi_U$ on $U$ and $\xi_V$ on $V$ by

\begin{eqnarray}

\xi_U = \begin{cases}

p_V \omega, & x \in U \cap V \\

0, & x \in U - (U \cap V)

\end{cases} \\

\xi_V = \begin{cases}

p_U \omega, & x \in U \cap V \\

0, & x \in V - (U \cap V)

\end{cases}

\end{eqnarray}

Since the support of $p_V$ lies in $V$, $\xi_U$ is a smooth form on $U$; similarly, $\xi_V$ is smooth on $V$. The niceness of the partition of unity then gives

\begin{equation}

j(-\xi_U,\xi_V)=\xi_V+\xi_U=p_U\omega+p_V\omega=\omega \text{ on } U \cap V

\end{equation}

This shows that $j$ is onto. Since the next map sends everything to $0$, together with the previous steps this proves that the sequence is a short exact sequence.

Sunday, July 19, 2020

Cohomology-Long exact sequence

- A cochain map $\phi:\mathcal{A}\rightarrow\mathcal{B}$ induces a map in cohomology

\begin{equation}

\phi^{*}:H^k(\mathcal{A}) \rightarrow H^k(\mathcal{B})

\end{equation}

- For a short exact sequence of cochain complexes

\begin{equation}

0 \rightarrow \mathcal{A} \xrightarrow{i} \mathcal{B} \xrightarrow{j} \mathcal{C} \rightarrow 0

\end{equation}

there is a connecting homomorphism

\begin{equation}

d^{*}:H^k(\mathcal{C}) \rightarrow H^{k+1}(\mathcal{A})

\end{equation}

- This short exact sequence of cochain complexes gives rise to a long exact sequence in cohomology.

\begin{equation}

\cdots H^{k-1}(\mathcal{C}) \xrightarrow{d^{*}} H^k(\mathcal{A}) \xrightarrow{i^{*}} H^k(\mathcal{B}) \xrightarrow{j^{*}} H^k(\mathcal{C}) \xrightarrow{d^{*}} H^{k+1}(\mathcal{A}) \cdots

\end{equation}

Saturday, July 18, 2020

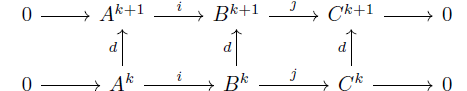

Cohomology-Connecting homomorphisms

\begin{equation}

0 \rightarrow \mathcal{A} \xrightarrow{i} \mathcal{B} \xrightarrow{j} \mathcal{C} \rightarrow 0

\end{equation}

A sequence of cochain complexes as above is called ``short exact'' if $i,j$ are cochain maps and, for each $k$,

\begin{equation}

0 \rightarrow A^k \xrightarrow{i} B^k \xrightarrow{j} C^k \rightarrow 0

\end{equation}

is a short exact sequence of vector spaces.

Based on this sequence, we can define a new map $d^*:H^k(\mathcal{C})\rightarrow H^{k+1}(\mathcal{A})$, called the ``connecting homomorphism''.

Let $[c] \in H^k(\mathcal{C})$, so $c \in C^k$ with $dc=0$. Since the map $C^k \rightarrow 0$ sends all elements to $0$, exactness at $C^k$ means that $j$ is onto. So there exists an element $b\in B^k$ such that $c=j(b)$.

Because $j$ is a cochain map (the diagram above commutes), $C^{k+1}$ can be reached via $d(j(b))$ and also via $j(d(b))$, with the same result. That is,

\begin{equation}

d(j(b)) = j(d(b))

\end{equation}

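However, $c=j(b)$, so $j(db)=d(j(b))=dc=0$. Thus the element $db \in B^{k+1}$ lies in $ker\;j$. By exactness at $B^{k+1}$, $ker\;j=im\;i$, so $db=i(a)$ for some $a \in A^{k+1}$, which is unique because $i$ is injective. Moreover, $a$ is closed: $i(da)=d(i(a))=d(db)=0$, and $i$ is injective, so $da=0$. We define

\begin{equation}

d^*[c] = [a] \in H^{k+1}(\mathcal{A})

\end{equation}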
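Here is a toy instance of this zig-zag, written as a minimal NumPy sketch. The three complexes are hypothetical choices, picked so that every space is $\mathcal{R}$ or $0$; `np.linalg.lstsq` solves $j(b)=c$ and $i(a)=db$. In general the lift $b$ is not unique, and one checks separately that $[a]$ does not depend on the choice. Here $\mathcal{B}$ has zero cohomology, so the long exact sequence forces $d^*:H^0(\mathcal{C})\rightarrow H^1(\mathcal{A})$ to be an isomorphism.

```python
import numpy as np

# Hypothetical toy complexes of real vector spaces (maps are matrices):
#   A:  A^0 = 0,  A^1 = R
#   B:  B^0 = R  --d = 1-->  B^1 = R
#   C:  C^0 = R,  C^1 = 0
# with i: A^1 -> B^1 and j: B^0 -> C^0 both the identity, so that
# 0 -> A^k -> B^k -> C^k -> 0 is exact in each degree k.
d_B0 = np.array([[1.0]])
i_1 = np.array([[1.0]])
j_0 = np.array([[1.0]])

def connecting(c):
    """d^*[c] for a closed c in C^0, following the zig-zag in the text."""
    b = np.linalg.lstsq(j_0, c, rcond=None)[0]    # lift: j(b) = c   (j is onto)
    db = d_B0 @ b                                 # differential: db in B^1 with j(db) = 0
    a = np.linalg.lstsq(i_1, db, rcond=None)[0]   # pull back: i(a) = db (i is one-one)
    assert np.allclose(i_1 @ a, db), "db must lie in im i = ker j"
    return a

print(connecting(np.array([2.0])))  # [2.]
```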

Cohomology of Cochain complex

cochain map:

Between any two cochain complexes $\mathcal{A}, \mathcal{B}$ one can define a cochain map $\phi:\mathcal{A} \rightarrow \mathcal{B}$, a collection of linear maps $\phi_k:A^k \rightarrow B^k$. If $d_1,d_2$ are the differential operators of $\mathcal{A},\mathcal{B}$, the commuting diagram requires $\phi_{k+1}\circ d_1 = d_2\circ\phi_k$ for every $k$.

Friday, July 17, 2020

Vector spaces-First isomorphism theorem

Let $T:V \rightarrow W$ be a linear transformation between vector spaces $V$ and $W$.

Then $\tau:V/ker(T) \rightarrow im(T)$, given by $\tau(v+ker(T)) = T(v)$, is an isomorphism.

First we need to establish that if $v+ker(T)$ is replaced by $v'+ker(T)$ for $v,v'$ in the same coset, i.e. $v-v' \in ker(T)$, then $T(v)=T(v')$. That is, we need to establish that the above map is well defined.

Notice,

$T(v) = T( (v-v')+v' ) = T(v-v')+T(v') = T(v')$

Then we need to establish that the map $\tau$ is a linear map.

That is, for $v,v' \in V$, we need to show that $\tau((v+ker(T)) + (v'+ker(T)))=\tau(v+ker(T))+\tau(v'+ker(T))$.

Indeed, $\tau((v+ker(T)) + (v'+ker(T))) = \tau(v+v'+ker(T)) = T(v+v') = T(v)+T(v')=\tau(v+ker(T))+\tau(v'+ker(T))$.

And

$\tau(\alpha (v+ker(T))) = \tau(\alpha v + ker(T)) = T(\alpha v) = \alpha T(v) = \alpha \tau(v+ker(T))$.

Thus the map $\tau:V/ker(T) \rightarrow im(T)$ is a linear map.

To prove isomorphism, we need to show that this map is one-one and onto.

For one-one, we need to show that if $\tau(v+ker(T))=0$, then $v+ker(T)$ is the zero coset $ker(T)$. This follows directly: $\tau(v+ker(T))=T(v)=0$ means $v \in ker(T)$.

For onto, note that any element of $im(T)$ can be written as $T(v)$ for some $v \in V$, and is thus equal to $\tau(v+ker(T))$.

Theorem (Universal mapping property for quotient spaces). Let $F$ be a field, $V,W$ vector spaces over $F$, $T : V \rightarrow W$ a linear transformation, and $U \subset V$ a subspace. If $U \subset ker(T)$, then there is a unique well-defined linear transformation $\tau : V/U \rightarrow W$ given by $\tau(v + U) = T(v)$.
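Here is a small SymPy sketch of the first isomorphism theorem for a hypothetical matrix $T:Q^3\rightarrow Q^2$. It shows that the dimension of $V/ker(T)$ equals the dimension of $im(T)$. It also shows that changing the representative of a coset by a kernel vector does not change $T(v)$, which is the well-definedness step above.

```python
import sympy as sp

# Hypothetical linear map T: Q^3 -> Q^2 (the second row is twice the first).
T = sp.Matrix([[1, 2, 3],
               [2, 4, 6]])

kernel_basis = T.nullspace()    # basis of ker(T)
image_basis = T.columnspace()   # basis of im(T)

# dim V/ker(T) = dim V - dim ker(T) should equal dim im(T).
dim_quotient = T.cols - len(kernel_basis)
print(dim_quotient, len(image_basis))   # 1 1

# Well-definedness: changing the representative v by a kernel vector leaves T(v) unchanged.
v = sp.Matrix([1, 1, 1])
print(all(T * (v + 5 * k) == T * v for k in kernel_basis))   # True
```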

Thursday, July 16, 2020

Cohomology-exact sequences-1

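$A \xrightarrow{f} B \xrightarrow{g} C$ is exact at $B$ when $im\;f=ker\;g$.

If $A \xrightarrow{f} B \xrightarrow{g} C \rightarrow 0$ is exact, then $g$ is onto, and the first isomorphism theorem gives

$B/ker\;g \cong C$, that is, $B/im\;f \cong C$.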

Wednesday, July 15, 2020

Cohomology product structures and ring structure.

- For a manifold $M$ of dimension $n$, the direct sum is $H^{*}(M) = \oplus_{k=0}^{n} H^{k}(M)$.

- Thus $\omega \in H^{*}(M)$ can be written as $\omega=\omega_0+\omega_1+\cdots+\omega_n$, where $\omega_{i} \in H^{i}(M)$.

- The wedge product of differential forms induces a product on $H^{*}(M)$, $[\omega][\tau]=[\omega\wedge\tau]$, which gives $H^{*}(M)$ a ring structure, called the "cohomology ring".

- The wedge product is anticommutative in the graded sense, $\omega\wedge\tau=(-1)^{kl}\tau\wedge\omega$ for a $k$-form $\omega$ and an $l$-form $\tau$, so the ring is (graded) anticommutative.

- The direct sum decomposition, with $H^k(M)\cdot H^l(M)\subset H^{k+l}(M)$, gives the cohomology ring a graded algebra structure.

- Thus, $H^{*}(M)$ is an anticommutative graded ring.
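Graded anticommutativity can be seen directly on constant-coefficient forms. Below is a minimal pure-Python sketch, not a library API. A form is stored as a dictionary from increasing index tuples $(i_1,\ldots,i_k)$, meaning $dx^{i_1}\wedge\cdots\wedge dx^{i_k}$, to coefficients, and the sign comes from sorting the concatenated indices.

```python
from itertools import product

def sort_sign(idx):
    """Bubble-sort a tuple of distinct indices; return (sorted tuple, sign of the permutation)."""
    idx = list(idx)
    sign = 1
    for i in range(len(idx)):
        for j in range(len(idx) - 1 - i):
            if idx[j] > idx[j + 1]:
                idx[j], idx[j + 1] = idx[j + 1], idx[j]
                sign = -sign
    return tuple(idx), sign

def wedge(a, b):
    """Wedge product of constant-coefficient forms stored as {index tuple: coefficient}."""
    out = {}
    for (I, x), (J, y) in product(a.items(), b.items()):
        K = I + J
        if len(set(K)) < len(K):      # a repeated dx^i gives zero
            continue
        K, s = sort_sign(K)
        out[K] = out.get(K, 0) + s * x * y
    return {K: c for K, c in out.items() if c != 0}

omega = {(0,): 1, (1,): 2}          # dx0 + 2 dx1            (a 1-form)
tau = {(1, 2): 1, (0, 2): 3}        # dx1^dx2 + 3 dx0^dx2    (a 2-form)
print(wedge(omega, tau), wedge(tau, omega))   # {(0, 1, 2): -5} {(0, 1, 2): -5}, sign (-1)^(1*2) = +1

a, b = {(0,): 1}, {(1,): 1}         # dx0 and dx1
print(wedge(a, b), wedge(b, a), wedge(a, a))  # {(0, 1): 1} {(0, 1): -1} {}
```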

Monday, July 13, 2020

Induced Cohomology maps

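For a smooth map $F:M\rightarrow N$, the induced map in cohomology is $F^{\#}:H^k(N)\rightarrow H^k(M)$, $F^{\#}([\omega])=[F^*\omega]$.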

Cohomology of Real line.

To start off, a fact about differential forms:

Differential forms belong to spaces of alternating forms, $A^k(M)$. Whenever $k>n$, where $n$ is the dimension of the tangent space at a given point, every differential $k$-form is $0$.

Since $R$ is connected, we can conclude that $H^0(R)=R$. Since $n=1$, all $2$-forms are zero. In particular, $d\omega=0$ for every $1$-form $\omega$, so all $1$-forms are closed. It remains to check that every closed $1$-form $f(x)dx$ is exact, i.e. to find $g$ with

$f(x)dx = dg = g'(x) dx$

which is solved by

$g(x) = \int_0^x f(t) dt$

Thus,

$H^k(R) = R$ when $k=0$, and $H^k(R)=0$ when $k>0$.

Sunday, July 12, 2020

Cohomology as measure of connectedness.

Cohomology - further motivation and definition.

Saturday, July 11, 2020

Cohomology - motivating example

$f_y =\frac{\partial f}{\partial y}$

Sunday, June 14, 2020

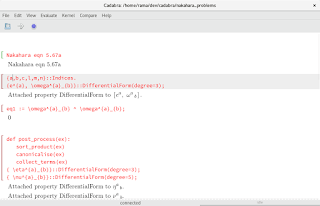

Cadabra Software

There are some very nice tutorials and user notebooks on this site. I installed Cadabra on openSUSE Linux (Leap). I could only use the cadabra2-gtk interface. I had issues installing the other interfaces because of incompatibilities with the Boost library versions that Leap supports.

cadabra2-gtk launches a Cadabra notebook, which behaves like a Jupyter notebook. There is supposed to be command completion, but it didn't work for me.

A nice thing about Cadabra is its elegant LaTeX support: LaTeX is built directly into the commands. A sample session is shown below:

Cadabra SW: Applying to some exercises in Nakahara

Tests on differential forms:

Test 1: If $\omega$ is a differential form of odd degree, say 3, then $\omega \wedge \omega = 0$. This is an attempt to let Cadabra verify this.

Nakahara eqn 5.67a

{a,b,c,l,m,n}::Indices.

{e^{a}, \omega^{a}_{b}}::DifferentialForm(degree=3);

0

sort_product(ex)

canonicalise(ex)

collect_terms(ex)

{ \eta^{a}_{b}}::DifferentialForm(degree=3);

\eta^{a}_{b} ^ \nu^{a}_{b}-\eta^{a}_{b} ^ \nu^{a}_{b}

Exercise 5.15: Let $\xi \in \Omega^{q}(M)$ and $\omega \in \Omega^{r}(M)$.

Show that $d(\xi \wedge \omega) = d\xi \wedge \omega + (-1)^{q} \xi \wedge d\omega$.

For simplicity, we set $q=3$ and $r=5$, so that $(-1)^q=-1$ and a minus sign appears in the expression.

The following notebook is a useful reference: https://cadabra.science/notebooks/exterior.html

\(\displaystyle{}\text{Attached property DifferentialForm to }\omega.\)

Add definition of exterior derivative.

d{#}::LaTeXForm("{\rm d}").

\(\displaystyle{}\text{Attached property ExteriorDerivative to }d{\#}.\)

ext1 := d{ \xi ^ \omega };

\(\displaystyle{}{\rm d}\left(\xi\wedge \omega\right)\)

product_rule(_);

\(\displaystyle{}{\rm d}{\xi}\wedge \omega-\xi\wedge {\rm d}{\omega}\)

d(\xi) ^ \omega-\xi ^ d(\omega)

This demonstrates equation $5.69$ in Nakahara for odd $q$.
